Machine Learning - Machine learning is a branch of artificial intelligence (AI) and computer science which focuses on the use of data and algorithms to imitate the way that humans learn, gradually improving its accuracy.

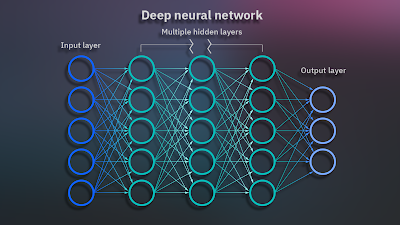

Python Neural Network - Neural networks, also known as artificial neural networks (ANNs) or simulated neural networks (SNNs), are a subset of machine learning and are at the heart of deep learning algorithms. Their name and structure are inspired by the human brain, mimicking the way that biological neurons signal to one another.
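To make the idea of neurons signalling to one another concrete, here is a minimal NumPy sketch of a tiny network. A hidden layer of ReLU neurons feeds one sigmoid output neuron, which is the same Dense → ReLU → Dense → sigmoid ending used by the model later in this post. The weights and inputs are made-up numbers chosen only for illustration, and `forward` is a hypothetical helper, not a Keras function.

```python
import numpy as np

def relu(z):
    # ReLU keeps positive values and sets negative values to 0
    return np.maximum(z, 0.0)

def sigmoid(z):
    # Sigmoid squashes any real number into the range (0, 1)
    return 1.0 / (1.0 + np.exp(-z))

def forward(x, w_hidden, b_hidden, w_out, b_out):
    # Each hidden neuron takes a weighted sum of its inputs plus a bias,
    # then passes it through ReLU
    hidden = relu(x @ w_hidden + b_hidden)
    # The output neuron does the same with the hidden activations,
    # and sigmoid turns the result into a probability
    return sigmoid(hidden @ w_out + b_out)

# Illustrative values only: 3 inputs, 2 hidden neurons, 1 output neuron
x = np.array([0.5, -1.0, 2.0])
w_hidden = np.array([[0.2, -0.4],
                     [0.1, 0.3],
                     [0.5, 0.2]])
b_hidden = np.array([0.0, 0.1])
w_out = np.array([[1.0], [-1.0]])
b_out = np.array([0.0])

print(forward(x, w_hidden, b_hidden, w_out, b_out))
```

Training a network means adjusting `w_hidden`, `b_hidden`, `w_out` and `b_out` so that outputs like this one move closer to the correct labels.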

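Python Training Model - Train/Test is a method to measure the accuracy of your model. It is called Train/Test because you split the data set into two sets: a training set and a testing set. The model learns from the training set, and its accuracy is then measured on the testing set, which it has never seen.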
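The dataset used in this post already comes split into a "Training set" folder and a "Testing dataset" folder, but the idea behind such a split can be sketched in a few lines of plain Python. `train_test_split` below is a hypothetical helper written for this illustration (not scikit-learn's function of the same name), and the file names are placeholders.

```python
import random

def train_test_split(items, test_fraction=0.2, seed=42):
    """Shuffle a copy of `items` and split it into (train, test) lists."""
    shuffled = list(items)                  # copy, so the caller's list is untouched
    random.Random(seed).shuffle(shuffled)   # fixed seed: the same split on every run
    n_test = round(len(shuffled) * test_fraction)
    # The first n_test items become the test set, the rest the training set
    return shuffled[n_test:], shuffled[:n_test]

# Hypothetical file names standing in for a folder of images
files = [f"img_{i}.jpg" for i in range(10)]
train, test = train_test_split(files, test_fraction=0.3)
print(len(train), len(test))  # 7 3
```

Shuffling first matters: if the files were sorted (for example all men first, then all women), taking the last 30% without shuffling would put only one class in the test set.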

Python Deep Learning - Deep learning allows computational models that are composed of multiple processing layers to learn representations of data with multiple levels of abstraction. This multi-layered approach is usually described in terms of representation learning: each layer learns a more abstract representation of the data than the layer before it.

What Are We Going To Do Here?

In simple terms, we will teach Python to tell whether the person in an image is a woman (♀) or a man (♂), and we will build the entire project in Google Colab.

Change Runtime Type (In Google Colab)

First, go to Runtime > Change runtime type to open Notebook settings.

Set Hardware accelerator to GPU and click Save.

Download The Gender Dataset From Dropbox

♀♂ Run 1 - Download Gender Data From Dropbox

!wget www.dropbox.com/s/1axwa3bhtr4e369/gender%20detection.zip

♀♂ Run 2 - Extract The Zip File

!unzip "/content/gender detection.zip"

♀♂ Run 3 - Assign Variables To Dataset

Epochs: the number of times the learning algorithm works through the entire training dataset.

Batch size: the number of images the model processes before it updates its weights, for example 5 images per batch.

    # Paths to the extracted dataset folders
    train_data_dir = r"/content/gender_dataset_face/Training set"
    validation_data_dir = r"/content/gender_dataset_face/Testing dataset"

    # Every image is resized to 150 x 150 pixels
    img_width, img_height = 150, 150

    # Used later as steps_per_epoch and validation_steps,
    # i.e. the number of *batches* per epoch, not the number of images
    nb_train_sample = 10
    nb_validation_samples = 10

    # Number of training epochs
    epochs = 50

    # Training in batches: 5 images per batch
    batch_size = 5
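`nb_train_sample` and `nb_validation_samples` are passed to `fit` as `steps_per_epoch` and `validation_steps`, and those count batches, not images. A quick sketch of how many images one epoch actually covers, using the image counts that `flow_from_directory` reports further down (1641 training and 666 validation images):

```python
import math

batch_size = 5
steps_per_epoch = 10      # value of nb_train_sample in this post
train_images = 1641       # reported by flow_from_directory for the training set
validation_images = 666   # reported by flow_from_directory for the validation set

# Images seen per epoch = batches per epoch * images per batch
images_per_epoch = steps_per_epoch * batch_size
print(images_per_epoch)  # 50

# Batches needed to go through every training / validation image once
full_train_steps = math.ceil(train_images / batch_size)
full_validation_steps = math.ceil(validation_images / batch_size)
print(full_train_steps, full_validation_steps)  # 329 134
```

With these settings each epoch uses about 50 of the 1641 training images. Setting `steps_per_epoch` to 329 (or leaving it out, in which case TensorFlow 2.x Keras uses `len(train_generator)`) would make one epoch a full pass over the data, at the cost of longer training.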

♀♂ Run 4 - Image_Data_Format Parameter In Keras

Keras is a high-level neural network library for Python that can run on top of TensorFlow, CNTK (Microsoft Cognitive Toolkit), or Theano (with limited support for MXNet and Deeplearning4j); Keras calls these 'backends'. The backend Keras should use is defined in the keras.json file, which is

- located at ~/.keras/keras.json on Linux and macOS,

- and at %USERPROFILE%\.keras\keras.json on Windows.

The default keras.json file (with TensorFlow as the backend) looks like this:

    {
        "epsilon": 1e-07,
        "floatx": "float32",
        "image_data_format": "channels_last",
        "backend": "tensorflow"
    }

For CNTK, keras.json would look like this:

    {
        "epsilon": 1e-07,
        "floatx": "float32",
        "image_data_format": "channels_last",
        "backend": "cntk"
    }

Likewise, keras.json for Theano would look like this:

    {
        "epsilon": 1e-07,
        "floatx": "float32",
        "image_data_format": "channels_first",
        "backend": "theano"
    }
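Because keras.json is ordinary JSON, reading it takes only the standard library. The sketch below shows how the file overrides the defaults. `read_keras_config` is a hypothetical helper for illustration only; in real code, `tf.keras.backend.image_data_format()` (as used in Run 4) is the way to ask Keras which setting is active.

```python
import json
import os

# Same values as the default keras.json shown above
DEFAULT_CONFIG = {
    "epsilon": 1e-07,
    "floatx": "float32",
    "image_data_format": "channels_last",
    "backend": "tensorflow",
}

def read_keras_config(path=None):
    """Return the settings from keras.json, falling back to the defaults."""
    if path is None:
        # expanduser("~") is the home folder on Linux/macOS and %USERPROFILE% on Windows
        path = os.path.join(os.path.expanduser("~"), ".keras", "keras.json")
    config = dict(DEFAULT_CONFIG)
    if os.path.exists(path):
        with open(path) as f:
            config.update(json.load(f))  # values in the file override the defaults
    return config

print(read_keras_config()["image_data_format"])
```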

Why is this image_data_format parameter so important?

The image_data_format parameter affects how each backend treats the data dimensions in multi-dimensional convolution layers (such as Conv2D, Conv3D, Conv2DTranspose, Cropping2D, and any other 2D or 3D layer). Specifically, it defines where the 'channels' dimension sits in the input data.

For 2D convolutions, both TensorFlow and Theano expect a four-dimensional tensor as input. TensorFlow expects the 'channels' dimension last (index 3, counting from 0), i.e. a tensor of shape (samples, rows, cols, channels), whereas Theano expects 'channels' in the second dimension (index 1), i.e. a tensor of shape (samples, channels, rows, cols). The outputs of the convolutional layers follow the same pattern.

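So, once the image_data_format parameter is set in keras.json, it tells Keras which dimension ordering to use in its convolutional layers. The tensorflow.keras API used in this project always runs on TensorFlow, but it still reads image_data_format from the same file.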

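Mixing up the channel order makes the layers treat the wrong axis as the channels, so the model either fails with a shape error or trains on data laid out differently from what you intended.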

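    # Choose the input shape that matches the backend's channel ordering.
    # Keras image shapes are (rows, cols), i.e. (height, width).
    import tensorflow.keras.backend as K

    if K.image_data_format() == 'channels_first':
        input_shape = (3, img_height, img_width)
    else:
        input_shape = (img_height, img_width, 3)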
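To see that the two layouts hold the same data in a different axis order, here is a minimal NumPy sketch. The batch of zeros is a stand-in for real images, and `np.transpose` converts between the two layouts.

```python
import numpy as np

# A batch of 2 RGB images, 150 x 150 pixels, in channels_last layout:
# (samples, rows, cols, channels)
batch_last = np.zeros((2, 150, 150, 3), dtype=np.float32)
batch_last[0, 10, 20, 2] = 1.0  # mark channel 2 (blue, in RGB order) of one pixel

# Move the channels axis from position 3 to position 1 to get channels_first:
# (samples, channels, rows, cols)
batch_first = np.transpose(batch_last, (0, 3, 1, 2))
print(batch_first.shape)

# Same pixel, same value, just addressed in a different axis order
print(batch_first[0, 2, 10, 20])

# And back to channels_last
restored = np.transpose(batch_first, (0, 2, 3, 1))
print(restored.shape)
```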

♀♂ Run 5 - Importing TensorFlow And Matplotlib

    import tensorflow
    from tensorflow.keras.preprocessing.image import ImageDataGenerator
    from tensorflow.keras.models import Sequential
    from tensorflow.keras.layers import Dense, Conv2D, Flatten, Dropout, MaxPooling2D, Activation
    from tensorflow.keras.preprocessing import image
    import matplotlib.pyplot as plt
    import matplotlib.image as mpimg

♀♂ Run 6 - To Get Equal Size Of Every Image While Training The Model

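Because the images come in different resolutions, every image has to be brought to the same size before it is fed to the model. ImageDataGenerator also rescales the pixel values from 0-255 to 0-1 and, for the training set, randomly shears, zooms, and horizontally flips the images to add variety.

flow_from_directory then generates the training data: it reads the images from the training folder, resizes them to target_size (img_height, img_width), yields them in batches of 5, and uses class_mode='binary' because there are only two classes to classify.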

    # Rescale pixels to 0-1 and augment the training images with random
    # shear, zoom and horizontal flips
    train_datagen = ImageDataGenerator(rescale=1./255,
                                       shear_range=0.2,
                                       zoom_range=0.2,
                                       horizontal_flip=True)

    # Read the training images, resize them to target_size (height, width),
    # yield batches of 5, and use binary labels because there are only
    # two classes (men = 0, women = 1, in the order given by `classes`)
    train_generator = train_datagen.flow_from_directory(train_data_dir,
                                                        target_size=(img_height, img_width),
                                                        batch_size=batch_size,
                                                        class_mode='binary',
                                                        classes=['men', 'women'])

>>> Found 1641 images belonging to 2 classes.
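Roughly what two of these ImageDataGenerator options do to each image, sketched with NumPy on a tiny made-up array. This illustrates the arithmetic only; it is not Keras's own code, and the random shear and zoom are not reproduced here.

```python
import numpy as np

# A made-up 2 x 3 image with a single channel: shape (rows, cols, channels)
pixels = np.array([[[0], [128], [255]],
                   [[64], [32], [16]]], dtype=np.float32)

# rescale=1./255: every pixel value is multiplied by 1/255,
# so 8-bit values 0..255 end up in the range 0..1
rescaled = pixels * (1.0 / 255)

# horizontal_flip=True: mirror the image left to right by reversing the
# column (width) axis. Keras flips each image with a 50% chance.
flipped = np.flip(rescaled, axis=1)

print(rescaled[0, :, 0])
print(flipped[0, :, 0])
```

Flipping is a safe augmentation for faces because a mirrored face is still a face of the same person; an upside-down flip would not be.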

♀♂ Run 7 - Same As Above, But For The Validation (Testing) Data

    # Validation images are only rescaled, not augmented
    test_datagen = ImageDataGenerator(rescale=1./255)
    validation_generator = test_datagen.flow_from_directory(validation_data_dir,
                                                            target_size=(img_height, img_width),
                                                            batch_size=batch_size,
                                                            class_mode='binary')

>>> Found 666 images belonging to 2 classes.

♀♂ Run 8 - Using Matplotlib To View Our Data, Now With Every Image The Same Size

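    # Show 15 training images in a 5 x 3 grid
    plt.figure(figsize=(12, 12))
    for i in range(15):
        plt.subplot(5, 3, i + 1)
        # Take the next batch from the generator and show its first image.
        # Named img so it does not overwrite the imported `image` module.
        X_batch, Y_batch = next(train_generator)
        img = X_batch[0]
        plt.imshow(img)
    plt.tight_layout()
    plt.show()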

♀♂ Run 9 - Building Your Model

    # Sequential stacks the layers one after another
    model1 = Sequential()

    # Convolutional layer: 64 filters of size 3 x 3 extract features from the images
    model1.add(Conv2D(64, (3, 3), input_shape=input_shape))
    model1.add(Activation('relu'))

    # MaxPooling2D halves the height and width of the feature maps
    model1.add(MaxPooling2D(pool_size=(2, 2)))

    # Flatten turns the 3D feature maps into a 1D vector per image
    model1.add(Flatten())

    # Fully connected hidden layer with 64 neurons
    model1.add(Dense(64))
    model1.add(Activation('relu'))

    # Output layer: 1 neuron with a sigmoid activation outputs a probability (0 to 1)
    model1.add(Dense(1))
    model1.add(Activation('sigmoid'))

    model1.summary()

    >>> Model: "sequential"
    _________________________________________________________________
     Layer (type)                   Output Shape              Param #
    =================================================================
     conv2d (Conv2D)                (None, 148, 148, 64)      1792
     activation (Activation)        (None, 148, 148, 64)      0
     max_pooling2d (MaxPooling2D)   (None, 74, 74, 64)        0
     flatten (Flatten)              (None, 350464)            0
     dense (Dense)                  (None, 64)                22429760
     activation_1 (Activation)      (None, 64)                0
     dense_1 (Dense)                (None, 1)                 65
     activation_2 (Activation)      (None, 1)                 0
    =================================================================
    Total params: 22,431,617
    Trainable params: 22,431,617
    Non-trainable params: 0
    _________________________________________________________________
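The shapes and parameter counts in this summary can be checked by hand. A short Python sketch of the arithmetic, assuming Conv2D's default 'valid' padding (no zero-padding, so a 3 x 3 kernel shrinks each side by 2) and MaxPooling2D's default stride, which equals the pool size:

```python
def conv2d_params(kernel_h, kernel_w, in_channels, filters):
    # Each filter has kernel_h * kernel_w * in_channels weights plus one bias
    return (kernel_h * kernel_w * in_channels + 1) * filters

def dense_params(inputs, units):
    # One weight per input per unit, plus one bias per unit
    return (inputs + 1) * units

side = 150 - 3 + 1            # 'valid' 3 x 3 convolution: 150 -> 148
pooled = side // 2            # 2 x 2 max pooling: 148 -> 74
flat = pooled * pooled * 64   # Flatten: 74 * 74 * 64 values per image

conv = conv2d_params(3, 3, 3, 64)   # 3 input channels (RGB)
hidden = dense_params(flat, 64)
output = dense_params(64, 1)

print(side, pooled, flat)      # 148 74 350464
print(conv, hidden, output)    # 1792 22429760 65
print(conv + hidden + output)  # 22431617
print(f"{hidden / (conv + hidden + output):.4%} of the parameters are in the first Dense layer")
```

Nearly all of the parameters belong to the first Dense layer, because Flatten hands it 350,464 values per image. Adding further Conv2D/MaxPooling2D blocks before Flatten would shrink that vector, and the Dense layer with it.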

♀♂ Run 10 - Compile Your Model

    # Compile the model: RMSprop optimizer, binary cross-entropy loss
    # (two classes), and accuracy as the metric to report during training
    model1.compile(optimizer='rmsprop',
                   loss='binary_crossentropy',
                   metrics=['accuracy'])
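`loss='binary_crossentropy'` measures how far the sigmoid outputs are from the 0/1 labels. A minimal NumPy sketch of the formula, with labels 0 = men and 1 = women (the order set by `classes=['men', 'women']`). It clips predictions by a small epsilon, as Keras also does, but it is an illustration, not Keras's actual implementation, and the predictions are made-up numbers.

```python
import numpy as np

def binary_crossentropy(y_true, y_pred, eps=1e-7):
    # Clip predictions away from exactly 0 and 1 so log() stays finite
    y_pred = np.clip(y_pred, eps, 1 - eps)
    # Mean of -[y * log(p) + (1 - y) * log(1 - p)] over the samples
    return float(np.mean(-(y_true * np.log(y_pred) + (1 - y_true) * np.log(1 - y_pred))))

y_true = np.array([0.0, 1.0, 1.0, 0.0])
mostly_right = np.array([0.1, 0.9, 0.8, 0.2])
mostly_wrong = np.array([0.9, 0.1, 0.2, 0.8])

print(binary_crossentropy(y_true, mostly_right))  # small loss
print(binary_crossentropy(y_true, mostly_wrong))  # much larger loss
```

Confident wrong answers are punished heavily because log(p) falls steeply as p approaches 0; the optimizer (RMSprop here) adjusts the weights to push this number down.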

♀♂ Run 11 - Training Your Model

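Running this cell again continues training from the weights the model already has, which can raise its accuracy. Keep an eye on the validation accuracy, though: if it stops improving while the training accuracy keeps rising, the model is overfitting.

You can also add your own images to the dataset to give the model more examples to learn from.

To train your model overnight without Google Colab disconnecting, paste the following JavaScript into your browser's console and run it. It logs "Working" and clicks Colab's Connect button every 60 seconds (60000 ms):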

    function ClickConnect() {
      console.log("Working");
      document.querySelector("colab-connect-button").shadowRoot.getElementById('connect').click();
    }
    setInterval(ClickConnect, 60000);

    # steps_per_epoch and validation_steps are numbers of batches per epoch
    training = model1.fit(train_generator,
                          steps_per_epoch=nb_train_sample,
                          epochs=epochs,
                          validation_data=validation_generator,
                          validation_steps=nb_validation_samples)

    >>> Epoch 1/50
    10/10 [==============================] - 11s 68ms/step - loss: 28.8991 - accuracy: 0.4000 - val_loss: 1.6395 - val_accuracy: 0.7400
    Epoch 2/50
    10/10 [==============================] - 1s 50ms/step - loss: 3.4661 - accuracy: 0.5000 - val_loss: 4.2565 - val_accuracy: 0.1800
    .....
    Epoch 50/50
    10/10 [==============================] - 1s 56ms/step - loss: 0.5074 - accuracy: 0.7800 - val_loss: 0.3892 - val_accuracy: 0.8400

♀♂ Run 12 - Plotting Model Accuracy And Loss

    import matplotlib.pyplot as plt
    %matplotlib inline

    # List the metrics recorded during training
    print(training.history.keys())

    # Training vs. validation accuracy per epoch
    plt.plot(training.history['accuracy'])
    plt.plot(training.history['val_accuracy'])
    plt.title('model accuracy')
    plt.ylabel('accuracy')
    plt.xlabel('epoch')
    plt.legend(['train', 'validation'], loc='upper left')
    plt.show()

    # Training vs. validation loss per epoch
    plt.plot(training.history['loss'])
    plt.plot(training.history['val_loss'])
    plt.title('model loss')
    plt.ylabel('loss')
    plt.xlabel('epoch')
    plt.legend(['train', 'validation'], loc='upper left')
    plt.show()
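`training.history` (on the History object that `fit` returns) is a plain dict that maps each metric name to a list with one value per epoch, so it can also be inspected numerically. A minimal sketch with a hypothetical `best_epoch` helper; the numbers below are made up and stand in for a real history.

```python
def best_epoch(history, metric="val_accuracy"):
    """Return (epoch_number, value) for the epoch with the highest `metric`."""
    values = history[metric]
    best_index = max(range(len(values)), key=lambda i: values[i])
    return best_index + 1, values[best_index]  # the training log counts epochs from 1

# Hypothetical 4-epoch history, shaped like training.history
history = {
    "accuracy":     [0.40, 0.60, 0.75, 0.90],
    "val_accuracy": [0.55, 0.70, 0.82, 0.78],
}
print(best_epoch(history))  # (3, 0.82)

# Gap between training and validation accuracy at the last epoch;
# a gap that keeps growing is a sign of overfitting
gap = history["accuracy"][-1] - history["val_accuracy"][-1]
print(round(gap, 2))  # 0.12
```

Keras can act on this during training with the `tf.keras.callbacks.EarlyStopping` callback (for example `monitor='val_accuracy', restore_best_weights=True`), passed to `fit` through `callbacks=[...]`.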

♀♂ Run 13 - Download Your Own Image

Download your own image and save it as image2.jpg (the -O option sets the output file name).

!wget "https://www.ihnice.com/media-picture/221-400-600-Students%20030rszd.jpg" -O "image2.jpg"

♀♂ Run 14 - View The Image At 100x100

    # View the image
    from IPython.display import Image
    Image('image2.jpg', width=100, height=100)

♀♂ Run 15 - Detect If Our Image Is Of A Man Or Woman

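    from tensorflow.keras.preprocessing import image
    import numpy as np

    img_path = "/content/image2.jpg"

    # Load the image at the size the model was trained on
    img_pred = image.load_img(img_path, target_size=(img_height, img_width))
    img_pred = image.img_to_array(img_pred)

    # Apply the same 1/255 rescaling the data generators used during training
    img_pred = img_pred / 255.0

    # Add a batch dimension: (150, 150, 3) -> (1, 150, 150, 3)
    img_pred = np.expand_dims(img_pred, axis=0)

    # The sigmoid output is the predicted probability of class 1 (women)
    rslt = model1.predict(img_pred)
    print(rslt)

    if rslt[0][0] >= 0.5:
        prediction = "Woman"
    else:
        prediction = "Man"
    print('Prediction: ', prediction)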

    >>> [[1.]]
    Prediction:  Woman
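Because the last layer is a sigmoid, `rslt[0][0]` is a probability between 0 and 1, not a hard 0 or 1. Turning it into a label means picking a threshold. A minimal sketch, assuming a threshold of 0.5 and the men = 0 / women = 1 order set by `classes=['men', 'women']`; `label_from_probability` is a hypothetical helper.

```python
def label_from_probability(p, class_names=("Man", "Woman"), threshold=0.5):
    # p is the model's estimate that the image belongs to class 1 (women);
    # at or above the threshold we pick class 1, otherwise class 0 (men)
    return class_names[1] if p >= threshold else class_names[0]

for p in (0.03, 0.49, 0.5, 0.97, 1.0):
    print(p, label_from_probability(p))
```

A plain equality check such as `p == 1` would label almost every real output (0.97, 0.8, ...) as the other class, which is why a threshold is used instead.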

♀♂ Run 16 - Writing The Prediction On Your Image

    import cv2
    from google.colab.patches import cv2_imshow

    img_path = "/content/image2.jpg"
    # cv2.imread loads the image as a NumPy array in BGR channel order
    image = cv2.imread(img_path)

    # Text settings
    font = cv2.FONT_HERSHEY_SIMPLEX
    org = (30, 150)          # (x, y) of the bottom-left corner of the text
    fontScale = 3
    color = (255, 0, 0)      # BGR order, so this draws blue text
    thickness = 10

    # Draw the predicted label onto the image
    image = cv2.putText(image, prediction, org, font, fontScale, color, thickness, cv2.LINE_AA)

    # cv2_imshow(image)                          # full size
    cv2_imshow(cv2.resize(image, (200, 300)))    # resized to 200 wide x 300 high
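OpenCV stores colour images in B, G, R order, which is why `color = (255, 0, 0)` draws blue text and why red would be `(0, 0, 255)`. `cv2_imshow` expects BGR input, but Matplotlib's `plt.imshow` expects RGB. A small NumPy sketch of the conversion, which amounts to reversing the last axis (OpenCV's own `cv2.cvtColor(image, cv2.COLOR_BGR2RGB)` does the same job):

```python
import numpy as np

# One pixel as OpenCV stores it: (B, G, R) = (255, 0, 0), i.e. pure blue
bgr_pixel = np.array([255, 0, 0], dtype=np.uint8)

# Reversing the channel axis converts BGR <-> RGB
rgb_pixel = bgr_pixel[::-1]
print(rgb_pixel)

# The same works for a whole (rows, cols, 3) image
bgr_image = np.zeros((2, 2, 3), dtype=np.uint8)
bgr_image[..., 2] = 255          # channel 2 is red in BGR order
rgb_image = bgr_image[..., ::-1]
print(rgb_image[0, 0])           # red now sits in channel 0, as RGB expects
```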
